Green Data Centers: Evolving to Meet AI's Growing Data Needs

Although data centres have existed since the advent of computing, the rise of cloud computing and the widespread adoption of AI, along with other digital services, have led to an exponential demand for larger and preferably sustainable data centres.

Vineeth Nair

TL;DR:

Data centres for the uninitiated: As the world embraces the transformative potential of AI, the demand for computational power is skyrocketing. At the heart of this technological revolution are data centres, which provide the essential infrastructure to fuel AI’s insatiable appetite for data processing and storage. These, sometimes gigantic, facilities are the backbone of the digital age, enabling the flow of information and facilitating the complex calculations that drive not just AI systems but other critical technologies like cloud computing, 5G, IoT, and big data analytics as well.

Challenges: Scaling data centres to meet AI’s demands brings forth various challenges. We deep-dive into these challenges, which include addressing energy consumption needs, water usage, carbon emissions, and managing e-waste.

Solutions: We explore emerging technologies and investment trends that are driving the evolution of data centres towards greater sustainability and efficiency.

Future outlook: We are optimistic about the ‘green’ future of data centres. We provide our perspective on the evolution of data centres in both the short and long term, highlighting the technologies that we find most exciting.

Let us help you decode what Elon Musk meant when he said, “I’ve never seen any technology advance faster than this… the next shortage will be electricity. They won’t be able to find enough electricity to run all the chips. I think next year, you’ll see they just can’t find enough electricity to run all the chips!” or what Sam Altman meant when he said that future artificial intelligence will need an energy breakthrough because it will consume vastly more power than people have expected. Let’s dive into what data centres look like today, understand the landscape, and examine what will be needed to manage their impact on the environment.

Introduction: So What Exactly Are Data Centres & Why Do We Need Them?

Short answer: Data storage and computational power!

Imagine you start an internet company, and it generates data that needs to be saved, processed, and retrieved. Over time, as more data is generated and the number of users increases, your in-house servers can no longer meet the demand. This is where data centres come in. Data centres provide a safe and scalable environment to store large quantities of data, accessible and monitored around the clock. In essence, data centres are concentrations of servers — the industrial equivalent of personal computers — designed to collect, store, process, and distribute vast amounts of data. Numerous such clustered servers are housed inside rooms, buildings, or groups of buildings. They offer several key advantages for businesses: scalability, reliability, security, and efficiency within a single suite.

As you can imagine, the total amount of data generated to date is immense, to the tune of 10.1 zettabytes (ZB) as of 2023! To put this into perspective, assuming one hour of a Netflix movie equals 1 GB of data, the total data generated equates to 10.1 trillion hours of viewing, or roughly 1.15 billion years!
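For readers who want to check the comparison, here is a minimal sketch of the arithmetic. It assumes decimal units (1 ZB = 10^12 GB) and a 365-day year; the function name `viewing_time` is ours, purely for illustration.

```python
# Back-of-the-envelope check of the streaming comparison above.
ZETTABYTE_IN_GB = 10**12   # 1 ZB = 10^21 bytes = 10^12 GB (decimal units, assumed)
HOURS_PER_YEAR = 24 * 365  # leap years ignored

def viewing_time(total_zb, gb_per_hour=1.0):
    """Return (hours, years) of streaming that total_zb zettabytes would cover."""
    hours = total_zb * ZETTABYTE_IN_GB / gb_per_hour
    return hours, hours / HOURS_PER_YEAR

hours, years = viewing_time(10.1)
print(f"{hours / 1e12:.1f} trillion hours, about {years / 1e9:.2f} billion years")
```

Changing `gb_per_hour` shows how sensitive the comparison is to the assumed streaming bitrate: at 3 GB per hour, the same data covers a third of the viewing time.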

Data centres are the unsung heroes that provide the infrastructure to store, retrieve and process most of this data.

“Everything on your phone is stored somewhere within four walls.”

(New York Times)

The design and infrastructure of data centres continue to evolve to meet the needs of modern computational and data-intensive tasks. Now, let’s take you inside a data centre.

What’s Inside the Box?

Data centres differ depending on the purpose they are built for, but fundamentally a data centre comprises four major components: compute, storage, network, and the supporting infrastructure.

Computing Infrastructure

Servers are the engines of the data centre. They perform the critical tasks of processing and managing data. Data centres must use processors best suited for the task at hand; for example, general-purpose CPUs may not be the optimal choice for solving artificial intelligence (AI) and machine learning (ML) problems. Instead, specialised hardware such as GPUs and TPUs is often used to handle these specific workloads efficiently.

Storage Infrastructure

Data centres host large quantities of information, both for their own purposes and for the needs of their customers. Storage infrastructure includes various types of data storage devices such as hard drives (HDDs), solid-state drives (SSDs), and network-attached storage (NAS) systems.

Network Infrastructure

Network equipment includes cabling, switches, routers, and firewalls that connect servers together and to the outside world.

Support Infrastructure

Power Subsystems: Uninterruptible power supplies (UPS) and backup generators ensure continuous operation during power outages.

Cooling Systems: Air conditioning and other cooling mechanisms prevent overheating of equipment. Cooling is essential because high-density server environments generate a significant amount of heat.

Fire Suppression Systems: These systems protect the facility from fire-related damage.

Building Security Systems: Physical security measures, such as surveillance cameras, access controls, and security personnel, safeguard against unauthorized access and physical threats.

AI applications generate far more data than other workloads, necessitating significant data centre capacity. The total amount of data generated is expected to grow to 21 ZB by 2027, driven largely by the adoption of AI! This demand has led to the construction of specialised data centres designed specifically for high-performance computing. These centres differ from traditional ones: they require more densely clustered and performance-intensive IT infrastructure, which generates much more heat and requires huge amounts of power. Training a large language model like OpenAI’s GPT-3, for instance, uses over 1,300 megawatt-hours (MWh) of electricity, roughly what 130 US homes use in a year. Traditional data centres are designed for an average density of 5 to 10 kW/rack, whereas those needed for AI require 60 kW/rack or more.
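The homes comparison can be reproduced with a few lines. This is only a sketch: the per-home figure of 10 MWh per year is simply what the two numbers above imply (1,300 MWh divided by 130 homes), and the 10.5 MWh value in the sensitivity check is a hypothetical alternative, not a sourced statistic.

```python
def homes_equivalent(training_mwh, home_mwh_per_year):
    """Number of homes whose annual electricity use matches one training run."""
    return training_mwh / home_mwh_per_year

GPT3_TRAINING_MWH = 1_300                    # figure quoted in the article
IMPLIED_HOME_MWH = GPT3_TRAINING_MWH / 130   # 10 MWh per home per year, implied by the text

print(homes_equivalent(GPT3_TRAINING_MWH, IMPLIED_HOME_MWH))   # 130.0

# Sensitivity check with a hypothetical 10.5 MWh per home per year.
print(round(homes_equivalent(GPT3_TRAINING_MWH, 10.5)))        # 124
```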

Rack density refers to the amount of computing equipment installed and operated within a single server rack.

Higher rack density exacerbates the cooling demands within data centres because concentrated computing equipment generates substantial heat. Since cooling typically accounts for roughly 40% of an average data centre’s electricity use, operators need innovative solutions to keep the systems cool at low costs.
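To make the density numbers concrete, the sketch below counts how many racks a fixed IT load would occupy at each density. The 1 MW load is a hypothetical example, and the function name `racks_needed` is ours. Since essentially all the electricity a rack draws ends up as heat, the kW/rack figure is also roughly the heat each rack gives off.

```python
import math

def racks_needed(it_load_kw, density_kw_per_rack):
    """Racks required to host a given IT load at a given rack density."""
    return math.ceil(it_load_kw / density_kw_per_rack)

IT_LOAD_KW = 1_000  # hypothetical 1 MW of IT equipment

for label, density in [("traditional, 5 kW/rack", 5),
                       ("traditional, 10 kW/rack", 10),
                       ("AI, 60 kW/rack", 60)]:
    count = racks_needed(IT_LOAD_KW, density)
    print(f"{label}: {count} racks, about {density} kW of heat per rack")
```

The same load fits into far fewer racks at AI densities, but each rack then gives off six to twelve times the heat, which is why conventional air cooling struggles.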

Market Outlook

At present, there are more than 8,000 data centres globally and it is interesting to note that approximately 33% of these are located in the United States and only about 16% in Europe.

As discussed, the surge in AI applications underscores the urgency for data centres to accommodate AI’s hefty compute requirements. Industry experts project a need for approximately 3,000 new data centres across Europe by 2025. They also anticipate global data centre capital expenditure to exceed US$500 billion by 2027, primarily driven by AI infrastructure demands. This puts stress on energy infrastructure, with energy companies increasingly citing AI power consumption as a leading contributor to new demand. For instance, a major utility company recently noted that data centres could comprise up to 7.5% of total U.S. electricity consumption by 2030!

While AI is a major driver of this demand, there are other key drivers:

Cloud Infrastructure: Businesses of all sizes are shifting towards cloud computing as costs come down.

5G and IoT Expansion: 5G and IoT generate massive amounts of data that need processing and storage, further fuelling the need for data centres.

Regulatory Requirements: Data privacy and security regulations are compelling organisations to invest in secure and compliant data storage solutions.

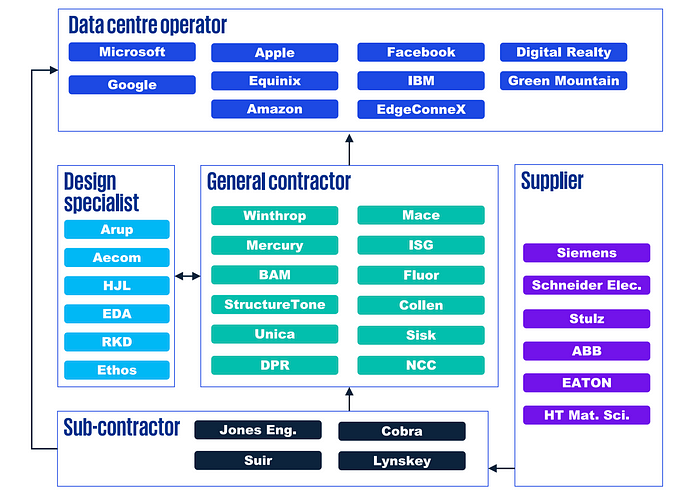

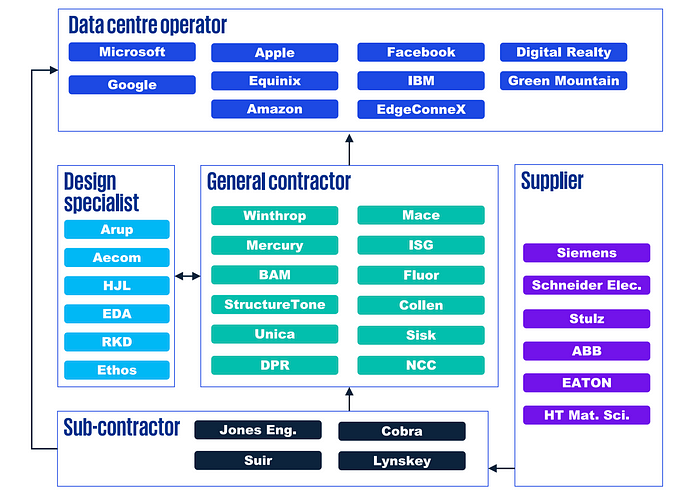

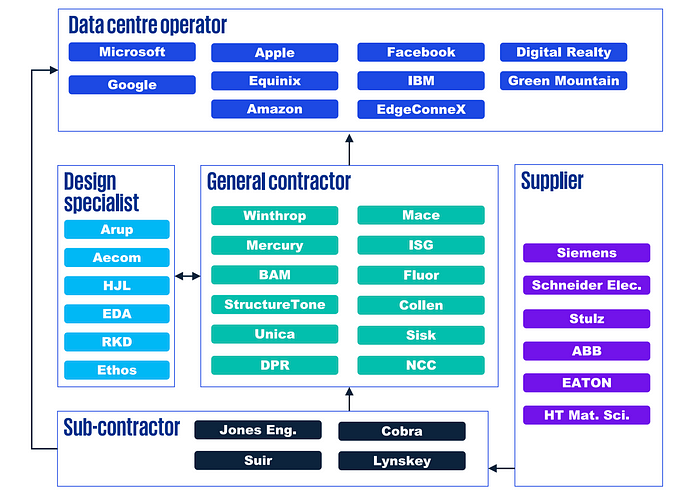

While the types of data centres are beyond the scope of this article, here are a few basics to understand the value chain.

Value chain: The beneficiaries of the data centre boom are many, with Big Tech being a major one.

In the next five years, consumers and businesses are expected to generate twice as much data as all the data created over the past ten years!

Soo…. What’s the problem?

The hidden cost of AI and opportunities

Despite their benefits, running massive data centres comes with significant costs, above all environmental ones. We have divided these impacts into four key dimensions: electricity, water, emissions, and e-waste.

Electricity

According to the IEA, data centre electricity use in Ireland has more than tripled since 2015, accounting for 18% of total electricity consumption in 2022. In Denmark, electricity usage from data centres is forecast to grow from 1% to 15% of total consumption by 2030.

As mentioned, running servers consumes substantial electricity. Data centres accounted for 2% of global electricity use in 2022, according to the IEA. In the US alone, data centres accounted for 4% of total electricity demand in 2022, and this is expected to grow to about 6% of total demand, or up to 260 TWh, by 2026.

To emphasise AI’s impact on electricity demand, it is important to point out that not all queries are made equal! Generative AI queries consume several times the energy of a typical search engine request. For example, a typical Google search requires 0.3 Wh of electricity, whereas a ChatGPT request requires 2.9 Wh, nearly ten times as much. If the 9 billion searches made daily were handled this way, it could require almost 10 TWh of additional electricity annually!
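Using the per-request figures quoted above, the annual estimate can be sketched as follows. The assumption that every one of the 9 billion daily searches is replaced by a generative AI request is ours, made only to bound the number; the function name is illustrative.

```python
SEARCH_WH = 0.3          # typical Google search, per the article
CHATGPT_WH = 2.9         # ChatGPT request, per the article
QUERIES_PER_DAY = 9e9    # daily searches, per the article

def annual_twh(queries_per_day, wh_per_query, days=365):
    """Annual electricity in TWh for a given per-query energy cost."""
    return queries_per_day * wh_per_query * days / 1e12  # Wh -> TWh

ratio = CHATGPT_WH / SEARCH_WH
total = annual_twh(QUERIES_PER_DAY, CHATGPT_WH)
extra = annual_twh(QUERIES_PER_DAY, CHATGPT_WH - SEARCH_WH)

print(f"energy ratio per request: {ratio:.1f}x")
print(f"all queries at 2.9 Wh: {total:.1f} TWh per year")
print(f"additional versus plain search: {extra:.1f} TWh per year")
```

Both results, roughly 9.5 TWh in total and 8.5 TWh of additional demand, sit in the same ballpark as the "almost 10 TWh" figure.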

Cooling servers also demands significant energy, as well as water, and it is alarming to note that cloud computing players often do not report their water consumption. The split of energy usage within data centres has traditionally been 40% for computing, another 40% for cooling, and the remaining 20% for running other IT infrastructure. These percentages highlight the major areas that need improvement. Depending on the pace of deployment, efficiency improvements, and trends in AI and cryptocurrency, the global electricity consumption of data centres is expected to reach between 620 and 1,050 TWh by 2026, up from 460 TWh in 2022.
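As a rough illustration, the sketch below applies the traditional 40/40/20 split to the 2022 global total and computes the growth implied by the 2026 range. Applying a per-facility split to the global figure is our simplifying assumption, not something the IEA numbers state.

```python
SPLIT = {"computing": 0.40, "cooling": 0.40, "other IT infrastructure": 0.20}

def breakdown(total_twh):
    """Apportion a total electricity figure using the traditional split."""
    return {use: total_twh * share for use, share in SPLIT.items()}

def growth_pct(new, base):
    """Percentage increase from base to new."""
    return (new - base) / base * 100

for use, twh in breakdown(460).items():
    print(f"{use}: {twh:.0f} TWh")

print(f"low case:  +{growth_pct(620, 460):.0f}%")
print(f"high case: +{growth_pct(1050, 460):.0f}%")
```

Under these assumptions cooling alone would account for about 184 TWh in 2022, and total consumption would grow by between about 35% and 128% by 2026.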

The sudden boom in the cryptocurrency space over the past 5 years has resulted in significant resources being diverted to mining.

The share of electricity demand from data centres, as a percentage of total electricity demand, is estimated to increase by an average of one percentage point in major economies.

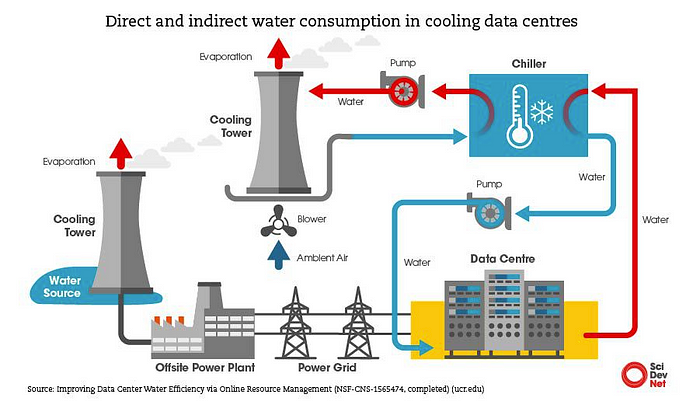

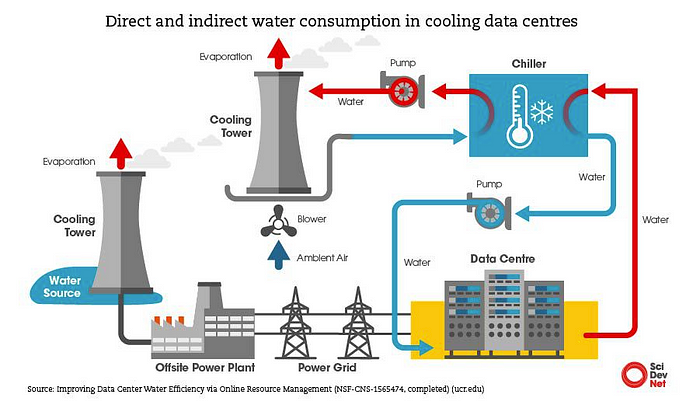

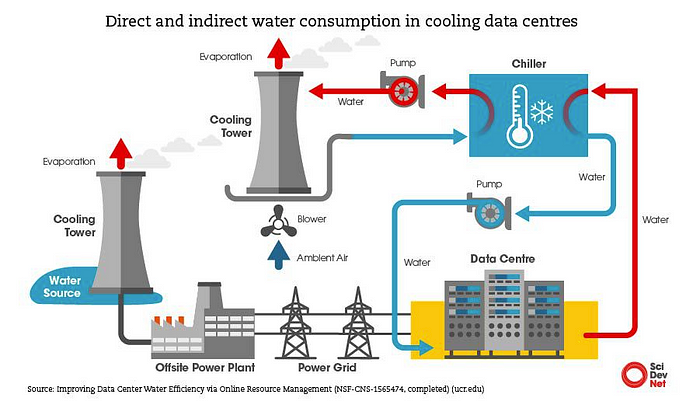

Water

In the US alone, data centres consume up to 1.7 billion litres of water per day for cooling. Training GPT-3 in Microsoft’s data centres, for example, can consume a total of 700,000 litres of clean freshwater. Most data centres do not release data about their water consumption, but a conventional data centre can consume a significant amount of potable water annually, with one example figure being 464,242,900 litres. At some major firms, water consumption has increased by more than 60% in the last four years. The median water footprint for internet use is estimated at 0.74 litres per GB, underscoring the relevance of water consumption!

Carbon Emissions

The data centre industry accounts for roughly 2% of global greenhouse gas emissions. The IT sector as a whole, including data centres, accounts for about 3–4% of global carbon emissions, about twice the aviation industry’s share. For perspective on a future where AI permeates all walks of life: training a single deep-learning model can emit up to 284,000 kg of CO2, equivalent to the lifetime emissions of five cars. Training earlier chatbot models such as GPT-3 produced 500 metric tons of greenhouse gas emissions, equivalent to about 1 million miles driven by a conventional gasoline-powered vehicle. The model required more than 1,200 MWh during the training phase, roughly the amount of energy used by a million American homes in one hour. Progress is being made each day, but it is safe to assume that future iterations have even greater needs and generate higher carbon emissions.

The diverse nature of AI workloads adds complexity. While training demands cost efficiency and less redundancy, inference requires low latency and robust connectivity. The carbon footprint of text queries is the lowest among all GenAI tasks. Food for thought for the next time you generate images for fun😬

E-waste

Rapid hardware obsolescence leads to substantial electronic waste. Global e-waste reached 53.6 million metric tons in 2019, with data centres contributing significantly. The primary reason is short equipment lifetimes, which drive frequent replacements: servers typically get replaced every 3 to 5 years, leading to spikes in emissions.

The environmental impact of data centres, particularly in the context of increasing AI and computational demands, is substantial. Addressing these challenges requires a multifaceted approach, including technological innovation, regulatory measures, and collaborative efforts. As the digital world continues to expand, the importance of sustainable practices in data centre operations becomes ever more critical. Governments are implementing measures to regulate data centre growth. For example, Singapore has imposed a temporary moratorium on new data centre construction in certain regions. Collaboration between data centre operators, real estate developers, governments, and technology providers is crucial for developing future-ready, energy-efficient, and environmentally responsible data centre infrastructure.

Areas of Innovation and The Frontrunners

Addressing the varying requirements for AI necessitates innovative hybrid solutions that combine traditional and AI-optimised designs to future-proof data centres.

We have grouped the startups and solutions into the following categories:

Software and Infrastructure Solutions

Enhanced AI algorithms enable more impactful dynamic resource allocation to optimize energy management in real time. Software solutions allow operators to temporarily shift power loads, using carbon-aware models that relocate data centre workloads to regions with lower carbon intensity at selected times. It comes as no surprise that companies like Nvidia are developing AI-driven solutions to optimise data centre operations that can significantly reduce energy consumption. Future Facilities Ltd (acquired by Cadence) is using AI and “digital twin” technology to help organisations design more energy-efficient systems. Paris-based FlexAI (est. 2023), which recently came out of stealth and raised a $30M seed round (investors include Elaia Partners, First Capital and Partech), is developing operating systems to help AI developers design systems that achieve more performance with less energy usage. Coolgradient (est. 2021, backed by 4impact Capital) is developing intelligent data centre optimization software to help reduce energy consumption and increase efficiency.
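To give a feel for what carbon-aware load shifting means in practice, here is a deliberately simplified sketch. It is not any vendor's product or API: the `Slot` type, the region names, and the carbon-intensity forecast values are all hypothetical, and a real scheduler would also weigh latency, data residency, and capacity.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Slot:
    region: str
    hour: int                  # hours from now at which the job could start
    carbon_g_per_kwh: float    # forecast grid carbon intensity (hypothetical values)

def pick_greenest_slot(slots, deadline_hour):
    """Choose the lowest-carbon slot that still starts before the deadline."""
    eligible = [s for s in slots if s.hour <= deadline_hour]
    if not eligible:
        raise ValueError("no slot available before the deadline")
    return min(eligible, key=lambda s: s.carbon_g_per_kwh)

def emissions_kg(energy_kwh, slot):
    """Estimated emissions for running a job of energy_kwh in the given slot."""
    return energy_kwh * slot.carbon_g_per_kwh / 1000

forecast = [
    Slot("region-a", 0, 420.0),
    Slot("region-b", 3, 90.0),
    Slot("region-a", 8, 60.0),   # greenest, but after the deadline below
]
best = pick_greenest_slot(forecast, deadline_hour=6)
print(best.region, best.hour, emissions_kg(500, best), "kg CO2")
```

In this toy forecast, a deferrable 500 kWh batch job moved to `region-b` three hours later emits 45 kg of CO2 instead of the 210 kg it would emit if run immediately in `region-a`.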

Federated learning is a decentralized approach to training machine learning models across multiple devices or servers holding data samples, without directly exchanging the raw data itself. Unlike traditional centralized machine learning where all data is aggregated on a central server for model training, federated learning keeps the data distributed and trains models locally on each device or server. A central server periodically aggregates the model updates from the distributed clients to form a global model. Companies like Bitfount are developing federated platforms that enable this decentralized collaborative training of AI models without compromising data privacy. Their technology allows algorithms to travel to datasets instead of raw data being shared. This federated approach distributes the compute load across many devices and can significantly reduce the overall energy footprint compared to centralized training in the cloud.
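The train-locally-then-aggregate loop described above can be sketched in a few lines. This is a toy version of federated averaging on a one-parameter linear model, with made-up client datasets; it illustrates the mechanics only, is not Bitfount's implementation, and says nothing by itself about energy savings.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """A few gradient-descent steps for y ~ w * x on one client's private data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(global_w, clients):
    """One round: clients train locally; the server only sees weights and sample counts."""
    updates = [(local_update(global_w, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    # Weighted average of client weights, proportional to local dataset size.
    return sum(w * n for w, n in updates) / total

# Hypothetical private datasets, all drawn from y = 3x; raw points never leave a client.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
print(f"global weight after 50 rounds: {w:.3f}")
```

The global weight converges towards 3, the slope shared by all three datasets, even though the server never sees a single raw data point.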

Advanced Hardware Solutions

It’s not just the design of data centres that can be made more energy-efficient. There seems to be a gap in innovation on the chip side as well (for AI use specifically), partly because the processors used for AI training, GPUs, were originally designed for a different use case: real-time rendering of computer-generated graphics in video games.

Addressing the challenge of improving energy efficiency, US-based Lightmatter (est. 2017, investors include Sequoia and Hewlett Packard Pathfinder) is using photonics technology to build processors specifically for AI development, which use less energy than traditional GPUs. Companies like Lumai, Lumiphase and Xscape Photonics (est. 2022, backed by Life Extension Ventures) are working on similar innovations. Celestial AI (est. 2020, backed by major funds such as Engine Ventures) has developed a “photonic fabric” technology for AI and machine learning applications that could increase chiplet efficiency and help push the limits of traditional data centres.

Tackling performance per watt from a memory compression standpoint, Swedish startup ZeroPoint Technologies (est. 2015, backed by Matterwave Ventures and Climentum) has shown that its technology delivers a 25% reduction in cost of ownership for data centre players through a massive decrease in electricity needs, and hence CO2 emissions.

Cooling technologies such as direct-to-chip and liquid cooling are gaining traction as efficient ways to dissipate heat and reduce energy consumption. Impact Cooling (est. 2022, backed by Impact Science Ventures) uses air jet impingement for heat transfer to reduce energy consumption and carbon footprint. Innovations such as rear-door heat exchangers are being adopted to address high-density environments. Startups like Submer (est. 2015, investors include Norrsken VC, Planet First Partners and Mundi Ventures) leverage immersion cooling technology to offer solutions that can reduce cooling energy consumption by up to 95%.

Taalas (est. 2023, backed by Quiet Capital and Pierre Lamond) is developing a platform that turns AI models into custom silicon, creating a ‘Hardcore Model’ that the company says is 1000x more efficient than its software counterpart.

Alternate power-sourcing solutions

Nuclear Power: Plans for nuclear-powered data centres, utilising small modular reactors (SMRs), are underway, with commissioning dates envisaged for 2030. Microsoft and Amazon are already integrating nuclear power into their data centres. Microsoft’s partnership with Constellation Energy to supply one of its data centres with nuclear power is one such example.

Green hydrogen: ECL, a California-based startup (backed by Molex and Hyperwise Ventures) that develops modular, cost-effective green-hydrogen-powered data centres, recently raised its seed round to develop its first zero-emission data centre.

Wind & Solar: Companies are now building data centres closer to renewable energy sources, such as wind and solar farms. WindCORES, a brand of the German wind energy company WestfalenWIND, has placed data centres inside (quite literally!) wind turbines to have access to clean energy! Exowatt, a Miami-based startup that raised a whopping $20M seed round last month (investors include Andreessen Horowitz, Atomic Labs and Sam Altman), is using thermal energy collection and storage solutions to run green data centres.

Data centre operators are also exploring alternative power-sourcing strategies such as natural gas. For example, Lowercarbon Capital-backed Crusoe uses natural gas that would otherwise be released into the atmosphere, to power its data centres.

‘Out of the World’ Solutions

Underwater Data Centres: Startups like Network Ocean (est. 2023, backed by Climate Capital), Orca X and Subsea Cloud are developing data centres that are submerged in water to leverage natural cooling.

Lunar Data Centres: Startups like Lonestar (est. 2018, backed by Scout Ventures) are working on developing small data centres on the moon, which could take advantage of abundant solar energy and would be less susceptible to natural disasters.

Space Data Centres: Thales Alenia Space is studying the feasibility of space-based data centres that would run on solar energy.

Provocative Science (est. 2023, backed by Unbounded Capital) is taking a completely different route by using the heat generated in data centres to run direct air capture (DAC)!

Conclusion and Future Outlook

As the demand for data storage and computational power continues to rise, driven by AI, the need for more and larger data centres will grow exponentially. The energy consumption of these facilities presents a significant challenge, necessitating innovations in efficiency and sustainable practices. Data centres are set to become even more integral to the global digital infrastructure, and the industry must adapt to meet these increasing demands responsibly and sustainably. Even if the AI industry improves data centre energy efficiency by 10%, doubling the number of data centres would still lead to an 80% increase in their carbon emissions.
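The closing arithmetic can be checked with a short sketch. It assumes, as a simplification of ours, that emissions scale linearly with the number of data centres and with energy use per facility; the function names are illustrative.

```python
def emissions_change_pct(capacity_multiplier, efficiency_gain):
    """Percentage change in emissions when capacity grows and each facility gets more efficient."""
    return (capacity_multiplier * (1 - efficiency_gain) - 1) * 100

def efficiency_needed_to_stay_flat(capacity_multiplier):
    """Efficiency gain required for emissions to stay unchanged as capacity grows."""
    return 1 - 1 / capacity_multiplier

print(f"{emissions_change_pct(2, 0.10):.0f}% more emissions")             # doubling with a 10% gain
print(f"{efficiency_needed_to_stay_flat(2):.0%} gain needed to break even")
```

Under these assumptions, doubling capacity with a 10% efficiency gain yields 2 × 0.9 = 1.8 times the original emissions, and it would take a 50% efficiency gain just to stand still.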

As a thesis-driven fund, we believe in backing category-defining companies, and in the realm of data centres we see two different futures emerging: one from a short-term perspective and another from a long-term, aspirational standpoint. In the short term, we are particularly interested in novel solutions that leverage a combination of renewables and long-duration energy storage (LDES). Additionally, we believe that making fundamental changes, even if small, can have a significant impact. Examples include hardware solutions that tweak chip architectures or improved cooling systems.

From a long-term perspective, we see deep-tech solutions, such as advanced chips designed specifically for the future, as highly promising. The future of AI and computational technology hinges on robust data centre infrastructure. Addressing environmental and operational challenges is crucial. Innovations in renewable energy, cooling technologies, and AI-driven optimisations promise a more sustainable and efficient future for data centres.

That said, we strongly believe in the resilience of our species. If data centres are fundamentally required, we will find ways to make them sustainable. Case in point: Ethereum, the second-largest cryptocurrency by market cap, has successfully reduced its electricity requirements by an impressive 99%!

We’re very excited about this space, and if you’re a founder building in this sector, we extend our warm invitation to engage with us. We’d love to discuss investment opportunities!

TL;DR:

Data centres for the uninitiated: As the world embraces the transformative potential of AI, the demand for computational power is skyrocketing. At the heart of this technological revolution are data centres, which provide the essential infrastructure to fuel AI’s insatiable appetite for data processing and storage. These, sometimes gigantic, facilities are the backbone of the digital age, enabling the flow of information and facilitating the complex calculations that drive not just AI systems but other critical technologies like cloud computing, 5G, IoT, and big data analytics as well.

Challenges: Scaling data centres to meet AI’s demands brings forth various challenges. We deep-dive into these challenges, which include addressing energy consumption needs, water usage, carbon emissions, and managing e-waste.

Solutions: We explore emerging technologies and investment trends that are driving the evolution of data centres towards greater sustainability and efficiency.

Future outlook: We are optimistic about the ‘green’ future of data centers. We provide our perspective on the evolution of data centers in both the short and long term, highlighting the technologies that we find most exciting.

Let us help you decode what Elon Musk meant when he said, “I’ve never seen any technology advance faster than this… the next shortage will be electricity. They won’t be able to find enough electricity to run all the chips. I think next year, you’ll see they just can’t find enough electricity to run all the chips!” or when Sam Altman said that an energy breakthrough is necessary for future artificial intelligence, which will consume vastly more power than people have expected. Let’s dive into what data centres look like today, understand the landscape, and what will be needed to manage their impact on the environment.

Introduction: So What Exactly Are Data Centres & Why Do We Need Them?

Short answer: Data storage and computational power!

Imagine you start an internet company, and it generates data that needs to be saved, processed, and retrieved. Over time, as more data is generated and the number of users increases, your in-house servers can no longer meet the demand. This is where data centres come in. Data centres provide a safe and scalable environment to store large quantities of data, accessible and monitored around the clock. In essence, data centres are concentrations of servers — the industrial equivalent of personal computers — designed to collect, store, process, and distribute vast amounts of data. Numerous such clustered servers are housed inside rooms, buildings, or groups of buildings. They offer several key advantages for businesses: scalability, reliability, security, and efficiency within a single suite.

As you can imagine, the total amount of data generated to date is immense, to the tune of 10.1 zettabytes (ZB) as of 2023! To put this into perspective, assuming one hour of a Netflix movie equals 1GB of data, the total data generated equates to 10.1 trillion hours of viewing or 1.1 billion years!

Data centres are the unsung heroes that provide the infrastructure to store, retrieve and process most of this data.

“Everything on your phone is stored somewhere within four walls.”

(New York Times)

The design and infrastructure of data centres continue to evolve to meet the needs of modern computational and data-intensive tasks. Now, let’s take you inside a data centre.

What’s Inside the Box?

Data centres can differ depending on the type/purpose they’re built for but a data centre is fundamentally comprised of 4 major components: compute, storage, network, and the supporting infrastructure.

Computing Infrastructure

Servers are the engines of the data centre. They perform the critical tasks of processing and managing data. Data centres must use processors best suited for the task at hand; for example, general-purpose CPUs may not be the optimal choice for solving artificial intelligence (AI) and machine learning (ML) problems. Instead, specialised hardware such as GPUs and TPUs are often used to handle these specific workloads efficiently.

Storage Infrastructure

Data centres host large quantities of information, both for their own purposes and for the needs of their customers. Storage infrastructure includes various types of data storage devices such as hard drives (HDDs), solid-state drives (SSDs), and network-attached storage (NAS) systems.

Network Infrastructure

Network equipment includes cabling, switches, routers, and firewalls that connect servers together and to the outside world.

Support Infrastructure

Power Subsystems — uninterruptible power supplies (UPS) and backup generators to ensure continuous operation during power outages.

Cooling Systems — Air conditioning and other cooling mechanisms prevent overheating of equipment. Cooling is essential because high-density server environments generate a significant amount of heat.

Fire Suppression Systems: These systems protect the facility from fire-related damages.

Building Security Systems: Physical security measures, such as surveillance cameras, access controls, and security personnel, safeguard against unauthorized access and physical threats.

AI applications generate far more data than other workloads, necessitating significant data centre capacities. The total amount of data generated is expected to grow to 21 ZB by 2027, driven largely by the adoption of AI! This demand has led to the construction of specialised data centres designed specifically for high-performance computing. These centres are different from traditional ones, as they require more densely clustered and performance-intensive IT infrastructure, generating much more heat and requiring huge amounts of power. Large language models like OpenAI’s GPT-3, for instance, use over 1,300 megawatt-hours (MWh) of electricity to train — roughly 130 US homes’ worth of electricity annually. Traditional data centres are designed with 5 to 10 kW/rack as an average density; whereas those needed for AI require 60 or more kW/rack.

Rack density refers to the amount of computing equipment installed and operated within a single server rack.

Higher rack density exacerbates the cooling demands within data centres because concentrated computing equipment generates substantial heat. Since cooling typically accounts for roughly 40% of an average data centre’s electricity use, operators need innovative solutions to keep the systems cool at low costs.

Market Outlook

At present, there are more than 8,000 data centres globally and it is interesting to note that approximately 33% of these are located in the United States and only about 16% in Europe.

As discussed, the surge in AI applications underscores the urgency for data centres to accommodate AI’s hefty compute requirements. Industry experts project a need for approximately 3,000 new data centres across Europe by 2025. They also anticipate global data centre capital expenditure to exceed US$500 billion by 2027, primarily driven by AI infrastructure demands. That translates to a stress on energy infrastructure with energy companies increasingly citing AI power consumption as a leading contributor to new demand. For instance, a major utility company recently noted that data centres could comprise up to 7.5% of total U.S. electricity consumption by 2030!

While AI is a major driver of this demand, the following are other key drivers

Cloud infrastructure — The shift towards cloud computing by businesses of all sizes with reducing costs

5G and IoT Expansion contribute to massive amounts of data that need processing and storage, further fuelling the need for data centres

Regulatory Requirements — Data privacy and security regulations are compelling organisations to invest in secure and compliant data storage solutions

While the types of data centres are beyond the scope of this article, here are a few basics to understand the value chain

Value chain: The beneficiaries of the boom in data centers are many with Big Tech being a major one

In the next five years, consumers and businesses are expected to generate twice as much data as all the data created over the past ten years!

Soo…. What’s the problem?

The hidden cost of AI and opportunities

Despite their benefits, there are costs associated with running massive data centres — significant costs — environmental costs!! We have divided these impacts into 4 key dimensions: Electricity, Water, Emissions and lastly E-waste.

Electricity

According to the IEA report, data centre electricity use in Ireland has more than tripled since 2015, accounting for 18% of total electricity consumption in 2022. In Denmark, electricity usage from data centres is forecast to grow from 1% to 15% of total consumption by 2030.

As mentioned, running servers consumes substantial electricity. Data centres accounted for 2% of global electricity use in 2022 according to the IEA. In the US alone, data centres accounted for 4% of total electricity demand in 2022, and this is expected to grow to about 6% of total demand, or up to 260 TWh, by 2026.

To emphasise AI’s impact on electricity demand, it is important to point out that not all queries are made equal! Generative AI queries consume several times the energy of a typical search engine request. For example, a typical Google search requires 0.3 Wh of electricity, whereas OpenAI’s ChatGPT requires 2.9 Wh per request, nearly ten times as much. If all 9 billion daily searches were handled this way, it could require almost 10 TWh of additional electricity annually!
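The arithmetic behind these per-query figures can be sketched in a few lines. This is only a back-of-the-envelope check: the constants come from the paragraph above, while the helper name `annual_twh` and the assumption that every one of the 9 billion daily searches is served at the ChatGPT per-request cost are ours.

```python
# Back-of-the-envelope check of the per-query energy figures quoted in the text.
SEARCH_WH = 0.3          # Wh per typical Google search (from the text)
CHATGPT_WH = 2.9         # Wh per ChatGPT request (from the text)
QUERIES_PER_DAY = 9e9    # daily searches (from the text)
DAYS_PER_YEAR = 365
WH_PER_TWH = 1e12


def annual_twh(wh_per_query, queries_per_day=QUERIES_PER_DAY):
    """Yearly electricity in TWh if every query costs `wh_per_query` Wh."""
    return wh_per_query * queries_per_day * DAYS_PER_YEAR / WH_PER_TWH


# How many times more energy one ChatGPT request needs than one search.
ratio = CHATGPT_WH / SEARCH_WH

# Assumption: all daily searches are answered at the ChatGPT cost.
all_genai_twh = annual_twh(CHATGPT_WH)

# Only the increment over what the same searches already consume today.
extra_twh = annual_twh(CHATGPT_WH - SEARCH_WH)

print(f"ratio: {ratio:.1f}x")
print(f"all queries at ChatGPT cost: {all_genai_twh:.2f} TWh/year")
print(f"increment over plain search: {extra_twh:.2f} TWh/year")
```

Under these assumptions the total comes to roughly 9.5 TWh a year, which is where the "almost 10 TWh" figure lands; the strict increment over today's search energy is somewhat lower.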

Cooling servers also demands significant energy, and water too, though it is alarming to note that cloud computing players often do not report water consumption. Traditionally, energy usage within data centres has been split as follows: 40% is consumed by computing activities, another 40% is used for cooling, and the remaining 20% is used for running other IT infrastructure. These percentages highlight the major areas that need improvement. Depending on the pace of deployment, efficiency improvements, and trends in AI and cryptocurrency, the global electricity consumption of data centres is expected to range between 620–1,050 TWh by 2026, up from 460 TWh in 2022.
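To make these numbers more tangible, here is a rough sketch that applies the traditional 40/40/20 split to the 2022 global figure and works out the implied growth to 2026. Applying a per-facility split to the global total is our simplifying assumption, and the function names are illustrative only.

```python
# Figures quoted in the text.
BASELINE_2022_TWH = 460
PROJECTION_2026_TWH = (620, 1050)   # low and high end of the projected range
ENERGY_SPLIT = {
    "computing": 0.40,
    "cooling": 0.40,
    "other IT infrastructure": 0.20,
}


def split_consumption(total_twh, split=ENERGY_SPLIT):
    """Break a total (TWh) down by use, assuming the traditional split holds."""
    return {use: total_twh * share for use, share in split.items()}


def growth_pct(new, old):
    """Percentage growth from `old` to `new`."""
    return (new - old) / old * 100


breakdown_2022 = split_consumption(BASELINE_2022_TWH)
growth_range = tuple(growth_pct(p, BASELINE_2022_TWH) for p in PROJECTION_2026_TWH)

for use, twh in breakdown_2022.items():
    print(f"{use}: {twh:.0f} TWh")
print(f"growth by 2026: {growth_range[0]:.1f}% to {growth_range[1]:.1f}%")
```

If the split held globally, cooling alone would account for about 184 TWh of the 2022 total, and the projected range implies growth of roughly 35% to 128% in four years.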

The sudden boom in the cryptocurrency space over the past 5 years has resulted in significant resources being diverted to mining.

The share of electricity demand from data centres, as a percentage of total electricity demand, is estimated to increase by an average of one percentage point in major economies.

Water

In the US alone, data centres consume up to 1.7 billion litres of water per day for cooling. Training GPT-3 in Microsoft’s data centres, for example, can consume a total of 700,000 litres of clean freshwater. Most data centres do not release data about their water consumption, but a conventional data centre can consume a significant amount of potable water annually: one example figure is 464,242,900 litres. At some major firms, water consumption has increased by more than 60% in the last four years. The median water footprint for internet use is estimated at 0.74 litres per GB, underscoring the relevance of water consumption!
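A quick way to get a feel for these water figures is to convert them to a common time base and apply the per-GB median to a given amount of traffic. The sketch below uses only the numbers quoted above; the 100 GB monthly usage is a hypothetical input chosen for illustration.

```python
# Figures quoted in the text.
US_COOLING_LITRES_PER_DAY = 1.7e9        # upper bound for US data centres
EXAMPLE_DC_LITRES_PER_YEAR = 464_242_900 # example conventional data centre
MEDIAN_LITRES_PER_GB = 0.74              # median water footprint of internet use
DAYS_PER_YEAR = 365


def water_for_traffic(gigabytes, litres_per_gb=MEDIAN_LITRES_PER_GB):
    """Estimated litres of water behind `gigabytes` of internet use."""
    return gigabytes * litres_per_gb


# US cooling water expressed per year instead of per day.
us_litres_per_year = US_COOLING_LITRES_PER_DAY * DAYS_PER_YEAR

# The example facility expressed per day instead of per year.
example_dc_litres_per_day = EXAMPLE_DC_LITRES_PER_YEAR / DAYS_PER_YEAR

# Hypothetical user consuming 100 GB of data in a month.
monthly_user_litres = water_for_traffic(100)

print(f"US cooling water: {us_litres_per_year / 1e9:.1f} billion litres/year")
print(f"example data centre: {example_dc_litres_per_day:,.0f} litres/day")
print(f"100 GB of internet use: {monthly_user_litres:.0f} litres")
```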

Carbon Emissions

The data centre industry accounts for roughly 2% of global greenhouse gas emissions. The IT sector, including data centres, accounts for about 3–4% of global carbon emissions, about twice as much as the aviation industry’s share. For perspective on what a future where AI permeates all walks of life holds: training a single deep-learning model can emit up to 284,000 kg of CO2, equivalent to the lifetime emissions of five cars. Training earlier chatbot models such as GPT-3 produced 500 metric tons of greenhouse gas emissions, equivalent to about 1 million miles driven by a conventional gasoline-powered vehicle. The model required more than 1,200 MWh during the training phase, roughly the amount of energy used by a million American homes in one hour. We have come a long way and progress is being made each day, but it is safe to assume that future iterations will have even greater needs and generate higher carbon emissions.

The diverse nature of AI workloads adds complexity. While training demands cost efficiency and less redundancy, inference requires low latency and robust connectivity. The carbon footprint of text queries is the lowest among all GenAI tasks. Food for thought for the next time you generate images for fun😬

E-waste

Rapid hardware obsolescence leads to substantial electronic waste. Global e-waste reached 53.6 million metric tons in 2019, with data centres contributing significantly. The primary reason is the short lifetime of the equipment, which drives frequent replacements: servers typically get replaced every 3 to 5 years, and each replacement cycle brings a spike in emissions.

The environmental impact of data centres, particularly in the context of increasing AI and computational demands, is substantial. Addressing these challenges requires a multifaceted approach, including technological innovation, regulatory measures, and collaborative efforts. As the digital world continues to expand, the importance of sustainable practices in data centre operations becomes ever more critical. Governments are implementing measures to regulate data centre growth. For example, Singapore has imposed a temporary moratorium on new data centre construction in certain regions. Collaboration between data centre operators, real estate developers, governments, and technology providers is crucial for developing future-ready, energy-efficient, and environmentally responsible data centre infrastructure.

Areas of Innovation and The Frontrunners

Addressing the varying requirements for AI necessitates innovative hybrid solutions that combine traditional and AI-optimised designs to future-proof data centres.

We have grouped the startups and solutions into 5 major categories:

Software and Infrastructure Solutions

Enhanced AI algorithms enable more effective dynamic resource allocation, optimising energy management in real time. Software solutions allow operators to temporarily shift power loads with carbon-aware models that relocate data centre workloads to regions with lower carbon intensity at selected times. It comes as no surprise that companies like Nvidia are developing AI-driven solutions to optimise data centre operations that can significantly reduce energy consumption. Future Facilities Ltd (acquired by Cadence) is using AI and “digital twin” technology to help organisations design more energy-efficient systems. Paris-based FlexAI (est. 2023), which recently came out of stealth and raised a $30M seed round (investors include Elaia Partners, First Capital and Partech), is developing operating systems to help AI developers design systems that achieve more performance with less energy usage. Coolgradient (est. 2021, backed by 4impact Capital) is developing intelligent data centre optimisation software to help reduce energy consumption and increase efficiency.
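The carbon-aware shifting described above boils down to a scheduling decision: given a forecast of grid carbon intensity per region and hour, run a deferrable job where and when the grid is cleanest. Below is a minimal sketch of that idea. The `Slot` type, the function names, the region labels and every intensity value are hypothetical and invented for illustration; this is not how any of the companies named above implement it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    region: str
    hour: int              # hours from now
    gco2_per_kwh: float    # forecast grid carbon intensity (made-up values)


def pick_greenest_slot(forecast, deadline_hour, allowed_regions=None):
    """Return the lowest-carbon slot that starts no later than the deadline."""
    candidates = [
        s for s in forecast
        if s.hour <= deadline_hour
        and (allowed_regions is None or s.region in allowed_regions)
    ]
    if not candidates:
        raise ValueError("no slot satisfies the deadline and region constraints")
    # Prefer lower carbon intensity; break ties by running sooner.
    return min(candidates, key=lambda s: (s.gco2_per_kwh, s.hour))


def emissions_kg(slot, job_kwh):
    """Estimated emissions in kg CO2 for a job of `job_kwh` run in `slot`."""
    return slot.gco2_per_kwh * job_kwh / 1000


# Hypothetical forecast: three regions, now and six hours from now.
FORECAST = [
    Slot("region-a", 0, 420.0), Slot("region-a", 6, 310.0),
    Slot("region-b", 0, 250.0), Slot("region-b", 6, 90.0),
    Slot("region-c", 0, 180.0), Slot("region-c", 6, 200.0),
]

best = pick_greenest_slot(FORECAST, deadline_hour=6)      # job may wait 6 hours
now_only = pick_greenest_slot(FORECAST, deadline_hour=0)  # job must start now

print(best, emissions_kg(best, job_kwh=500))
print(now_only, emissions_kg(now_only, job_kwh=500))
```

A real scheduler would also have to weigh data-transfer costs, latency and data-residency rules, which is why the sketch accepts an `allowed_regions` filter.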

Federated learning is a decentralized approach to training machine learning models across multiple devices or servers holding data samples, without directly exchanging the raw data itself. Unlike traditional centralized machine learning where all data is aggregated on a central server for model training, federated learning keeps the data distributed and trains models locally on each device or server. A central server periodically aggregates the model updates from the distributed clients to form a global model. Companies like Bitfount are developing federated platforms that enable this decentralized collaborative training of AI models without compromising data privacy. Their technology allows algorithms to travel to datasets instead of raw data being shared. This federated approach distributes the compute load across many devices and can significantly reduce the overall energy footprint compared to centralized training in the cloud.
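The train-locally-then-aggregate loop described above can be illustrated with a toy version of federated averaging. This is a sketch under simplifying assumptions, not Bitfount's platform or any production system: the two clients, their data points, the tiny linear model and all function names are made up for illustration, and everything runs in a single process.

```python
def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    The model is y = w * x + b with mean squared error as the loss.
    Only the updated weights and the sample count leave the client.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Server step: average client weights, weighted by sample count."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(clients, rounds=300):
    """Alternate local training and server-side aggregation."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates)
    return global_weights


# Two hypothetical clients whose raw (x, y) samples never leave them.
# Both datasets follow y = 2x + 1.
CLIENTS = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

w, b = train(CLIENTS)
print(f"learned model: y = {w:.3f} * x + {b:.3f}")
```

Note that the server only ever sees weights and sample counts, never the samples themselves, which is the privacy property the paragraph above describes.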

Advanced Hardware Solutions

It’s not just the design of data centres that can be made more energy-efficient. There seems to be a gap in innovation on the chip side as well (for AI use specifically), partly because the processors used for AI training, GPUs, were originally designed for a different use case: real-time rendering of computer-generated graphics in video games.

Addressing the challenge of improving energy efficiency, US-based Lightmatter (est. 2017, investors include Sequoia and Hewlett Packard Pathfinder) is using photonics technology to build processors specifically for AI development, which use less energy than traditional GPUs. Companies like Lumai, Lumiphase and Xscape Photonics (est. 2022, backed by Life Extension Ventures) are working on similar innovations. Celestial AI (est. 2020, backed by major funds such as Engine Ventures) has developed a “photonic fabric” technology for AI and machine learning applications that could increase chiplet efficiency and help push the limits of traditional data centres.

Tackling the problem of getting more performance out of every watt of electricity from a memory compression standpoint, Swedish startup ZeroPoint Technologies (est. 2015, backed by Matterwave Ventures and Climentum) has shown that its technology delivers a 25% reduction in total cost of ownership for data centre operators by sharply cutting electricity needs, and hence CO2 emissions.

Cooling technologies such as direct-to-chip and liquid cooling are gaining traction as efficient ways to dissipate heat and reduce energy consumption. Impact Cooling (est. 2022, backed by Impact Science Ventures) uses air jet impingement for heat transfer to reduce energy consumption and carbon footprint. Innovations such as rear-door heat exchangers are being adopted to address high-density environments. Startups like Submer (est. 2015, investors include Norrsken VC, Planet First Partners and Mundi Ventures) leverage immersion cooling technology to offer solutions that can reduce cooling energy consumption by up to 95%.

Taalas (est. 2023, backed by Quiet Capital and Pierre Lamond) is developing a platform that turns AI models into custom silicon, creating a ‘Hardcore Model’ that results in a 1000x efficiency improvement over its software counterpart.

Alternate Power-Sourcing Solutions

Nuclear Power: Plans for nuclear-powered data centres, utilising small modular reactors (SMRs), are underway, with commissioning dates envisaged for 2030. Microsoft and Amazon are already integrating nuclear power into their data centres. Microsoft’s partnership with Constellation Energy to supply one of its data centres with nuclear power is one such example.

Green hydrogen: ECL, a California-based startup (backed by Molex and Hyperwise Ventures) that develops modular, cost-effective green-hydrogen-powered data centres, recently raised its seed round to develop its first zero-emission data centre.

Wind & Solar: Companies are now building data centres closer to renewable energy sources, such as wind and solar farms. WindCORES, a brand of the German wind energy company WestfalenWIND, has placed data centres inside (quite literally!) wind turbines to have access to clean energy! Exowatt, a Miami-based startup that raised a whopping $20M seed round last month (investors include Andreessen Horowitz, Atomic Labs and Sam Altman), is using thermal energy collection and storage solutions to run green data centres.

Data centre operators are also exploring alternative power-sourcing strategies such as natural gas. For example, Lowercarbon Capital-backed Crusoe powers its data centres with natural gas that would otherwise be released into the atmosphere.

‘Out of the World’ Solutions

Underwater Data Centres: Startups like Network Ocean (est. 2023, backed by Climate Capital), Orca X and Subsea Cloud are developing data centres that are submerged in water to leverage natural cooling.

Lunar Data Centres: Startups like Lonestar (est. 2018, backed by Scout Ventures) are working on developing small data centres on the moon, which could take advantage of abundant solar energy and would be less susceptible to natural disasters.

Space Data Centres: Thales Alenia Space is studying the feasibility of space-based data centres that would run on solar energy.

Provocative Science (est. 2023, backed by Unbounded Capital) is taking a completely different route by using the heat generated in data centres to run direct air capture (DAC)!

Conclusion and Future Outlook

As the demand for data storage and computational power continues to rise, driven by AI, the need for more and larger data centres will grow exponentially. The energy consumption of these facilities presents a significant challenge, necessitating innovations in efficiency and sustainable practices. Data centres are set to become even more integral to the global digital infrastructure, and the industry must adapt to meet these increasing demands responsibly and sustainably. Even if the AI industry improves data centre energy efficiency by 10%, doubling the number of data centres would still lead to an 80% increase in their carbon emissions.
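The 10% versus 80% arithmetic above is easy to verify, and the same relation shows how large an efficiency gain would be needed just to stand still. The sketch assumes that emissions scale linearly with the number of data centres and with energy use per data centre, and that the carbon intensity of the electricity stays unchanged; the function names are ours.

```python
def relative_emissions(growth_factor, efficiency_gain):
    """Emissions relative to today after capacity growth and efficiency gains.

    growth_factor: 2.0 means twice as many data centres.
    efficiency_gain: 0.10 means each one uses 10% less energy.
    Assumes linear scaling and unchanged grid carbon intensity.
    """
    return growth_factor * (1 - efficiency_gain)


def break_even_efficiency_gain(growth_factor):
    """Efficiency gain needed to keep emissions flat for a given growth."""
    return 1 - 1 / growth_factor


# Doubling capacity with a 10% efficiency improvement.
increase = relative_emissions(2.0, 0.10) - 1

# Gain required so that doubling capacity adds no emissions at all.
needed = break_even_efficiency_gain(2.0)

print(f"emissions increase: {increase:.0%}")
print(f"efficiency gain needed to stay flat: {needed:.0%}")
```

In other words, under these assumptions efficiency would have to improve by half, not a tenth, for a doubling of capacity to leave emissions unchanged, which is why cleaner power sources matter as much as efficiency.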

As a thesis-driven fund, we believe in backing category-defining companies, and in the realm of data centres we see two different futures emerging: one from a short-term perspective and another from a long-term, aspirational standpoint. In the short term, we are particularly interested in novel solutions that leverage a combination of renewables and long-duration energy storage (LDES). Additionally, we believe that making fundamental changes, even if small, can have a significant impact. Examples include hardware solutions that tweak chip architectures and improved cooling systems.

From a long-term perspective, we see deep-tech solutions, such as advanced chips designed specifically for the future, as highly promising. The future of AI and computational technology hinges on robust data centre infrastructure. Addressing environmental and operational challenges is crucial. Innovations in renewable energy, cooling technologies, and AI-driven optimisations promise a more sustainable and efficient future for data centres.

That said, we strongly believe in the resilience of our species. If data centres are fundamentally required, we will find ways to make them sustainable. Case in point: Ethereum, the second-largest cryptocurrency by market cap, has successfully reduced its electricity requirements by an impressive 99%!

We’re very excited about this space, and if you’re a founder building in this sector, we extend our warm invitation to engage with us. We’d love to discuss investment opportunities!

As discussed, the surge in AI applications underscores the urgency for data centres to accommodate AI’s hefty compute requirements. Industry experts project a need for approximately 3,000 new data centres across Europe by 2025. They also anticipate global data centre capital expenditure to exceed US$500 billion by 2027, primarily driven by AI infrastructure demands. That translates to a stress on energy infrastructure with energy companies increasingly citing AI power consumption as a leading contributor to new demand. For instance, a major utility company recently noted that data centres could comprise up to 7.5% of total U.S. electricity consumption by 2030!

While AI is a major driver of this demand, the following are other key drivers

Cloud infrastructure — The shift towards cloud computing by businesses of all sizes with reducing costs

5G and IoT Expansion contribute to massive amounts of data that need processing and storage, further fuelling the need for data centres

Regulatory Requirements — Data privacy and security regulations are compelling organisations to invest in secure and compliant data storage solutions

While the types of data centres are beyond the scope of this article, here are a few basics to understand the value chain

Value chain: The beneficiaries of the boom in data centers are many with Big Tech being a major one

In the next five years, consumers and businesses are expected to generate twice as much data as all the data created over the past ten years!

Soo…. What’s the problem?

The hidden cost of AI and opportunities

Despite their benefits, there are costs associated with running massive data centres — significant costs — environmental costs!! We have divided these impacts into 4 key dimensions: Electricity, Water, Emissions and lastly E-waste.

Electricity

According to the IEA report, data centre electricity use in Ireland has more than tripled since 2015, accounting for 18% of total electricity consumption in 2022. In Denmark, electricity usage from data centres is forecast to grow from 1% to 15% of total consumption by 2030

As mentioned, running servers consumes substantial electricity. Data centres accounted for 2% of global electricity use in 2022 according to the IEA. In the US alone, the electricity consumption due to data centres in 2022 was 4% of the total demand and it is expected to grow to about 6% of total demand to up to 260TWh by 2026.

To emphasise on AI’s impact on the electricity demand, it is important to point out that not all queries are made equal! Generative AI queries consume energy at 4 or 5 times the magnitude of a typical search engine request. For example, a typical Google search requires 0.3 Wh of electricity, whereas OpenAI’s ChatGPT requires 2.9 Wh per request. With 9 billion searches daily, this could require almost 10 TWh of additional electricity annually!

Cooling servers also demands significant energy. It is alarming to note that cloud computing players often do not report water consumption. The split of energy usage within data centres has traditionally been — 40% consumed by computing activities, another 40% is used for cooling purposes, and the remaining 20% is used for running other IT infrastructure. These percentages highlight the major areas that need improvement. Depending on the pace of deployment, efficiency improvements, and trends in AI and cryptocurrency, the global electricity consumption of data centres is expected to range between 620–1,050 TWh by 2026, up from 460 TWh in 2022.

The sudden boom in the cryptocurrency space over the past 5 years has resulted in significant resources being diverted to mining

The share of electricity demand from data centers, as a percentage of total electricity demand, is estimated to increase by an average of one percentage point in major economies

Water

In the US alone, data centres consume up to 1.7 billion litres of water per day for cooling. Training GPT-3 in Microsoft’s data centres, for example, can consume a total of 700,000 litres of clean freshwater. And while most data centres do not release data about their water consumption requirements, conventional data centres can consume significant potable water annually, with an example figure being 464,242,900 litres. Water consumption has increased by more than 60% in the last four years in some major firms. The median water footprint for internet use is estimated at 0.74 litres per GB, underscoring the relevance of water consumption!

Carbon Emissions

The data centre industry accounts for roughly 2% of global greenhouse gas emissions. The IT sector, including data centres, accounts for about 3–4% of global carbon emissions, about twice as much as the aviation industry’s share. And just for perspective on what a future where AI permeates into all walks of life holds, it is critical to note that while we have come a long way and progress is being made each day, training a single deep-learning model can emit up to 284,000 kg of CO2, equivalent to the lifetime emissions of five cars, while training earlier chatbot models such as GPT-3 led to the production of 500 metric tons of greenhouse gas emissions — equivalent to about 1 million miles driven by a conventional gasoline-powered vehicle. The model required more than 1,200 MWh during the training phase — roughly the amount of energy used in a million American homes in one hour. It is safe to assume that future iterations have even greater needs and generate higher carbon emissions.

The diverse nature of AI workloads adds complexity. While training demands cost efficiency and less redundancy, inference requires low latency and robust connectivity. The carbon footprint of text queries is the lowest among all GenAI tasks. Food for thought for the next time you generate images for fun😬

E-waste

Rapid hardware obsolescence leads to substantial electronic waste. Global e-waste reached 53.6 million metric tons in 2019, with data centres contributing significantly. The primary reason is that the lifetime of the equipment impacts the frequency of replacements. Servers typically get replaced every 3 to 5 years, leading to spikes in emissions.

The environmental impact of data centres, particularly in the context of increasing AI and computational demands, is substantial. Addressing these challenges requires a multifaceted approach, including technological innovation, regulatory measures, and collaborative efforts. As the digital world continues to expand, the importance of sustainable practices in data centre operations becomes ever more critical. Governments are implementing measures to regulate data centre growth. For example, Singapore has imposed a temporary moratorium on new data centre construction in certain regions. Collaboration between data centre operators, real estate developers, governments, and technology providers is crucial for developing future-ready, energy-efficient, and environmentally responsible data centre infrastructure.

Areas of Innovation and The Frontrunners

Addressing the varying requirements for AI necessitates innovative hybrid solutions that combine traditional and AI-optimised designs to future-proof data centres.

We have clubbed the startups and solutions into 5 major categories -

Software and Infrastructure Solutions

Enhanced AI algorithms enable more impactful dynamic resource allocation to optimize energy management in real-time. Software solutions allow operators to temporarily shift power loads with carbon-aware models that relocate data centre workloads to regions with lower carbon intensity at selected times. It comes as no surprise that companies like Nvidia are developing AI-driven solutions to optimise data centre operations that can significantly reduce energy consumption. Future Facilities Ltd(acquired by Cadence), is using AI and “digital twin” technology to help organisations design more energy-efficient systems and Paris-based FlexAI(est. 2023), that recently came out of stealth and raised a $30M seed round(investors include Elaia Partners, First Capital and Partech), is developing operating systems to help AI developers design systems that achieve more performance with less energy usage. Coolgradient (est. 2021, backed by 4impact Capital) is developing intelligent data centre optimization software to help reduce energy consumption and increase efficiency.

Federated learning is a decentralized approach to training machine learning models across multiple devices or servers holding data samples, without directly exchanging the raw data itself. Unlike traditional centralized machine learning where all data is aggregated on a central server for model training, federated learning keeps the data distributed and trains models locally on each device or server. A central server periodically aggregates the model updates from the distributed clients to form a global model. Companies like Bitfount are developing federated platforms that enable this decentralized collaborative training of AI models without compromising data privacy. Their technology allows algorithms to travel to datasets instead of raw data being shared. This federated approach distributes the compute load across many devices and can significantly reduce the overall energy footprint compared to centralized training in the cloud.

Advanced Hardware Solutions

It’s not just the design of data centres that can be made more energy-efficient. There seems to be a gap in innovation on the chip side as well(for AI use specifically) partly because the processors that are used for AI training — GPUs — were originally designed for a different use-case: real-time rendering of computer-generated graphics in video games.

Addressing the challenge of improving energy efficiency, UK-based Lightmatter(est. 2017, investors include Sequoia and Hewlett Packard Pathfinder) is developing new chips using photonics technology to build processors specifically for AI development, which use less energy than traditional GPUs. Companies like Lumai, Lumiphase and Xscape Photonics(est. 2022, backed by Life Extention Ventures) are working on similar innovations. Celestial AI(est. 2020, backed by major funds such as Engine Ventures), to serve AI and machine learning applications, has developed a “photonic fabric” technology that could increase chiplet efficiency and helps push the limits of traditional data centres.

Addressing the problem of achieving more performance output for every watt of input electricity from a memory compression standpoint, a German startup ZeroPoint Technologies (est 2015, backed by Matterwave Ventures and Climentum) has proven that its technology effectuates a 25% reduction in cost of ownership for datacenter players via a massive decrease for electricity need — and hence CO2 emissions.

Cooling technologies such as direct-to-chip and liquid cooling are gaining traction as efficient ways to dissipate heat and reduce energy consumption. Impact Cooling(est. 2022, backed by Impact Science Ventures) uses air jet impingement for heat transfer to reduce energy consumption and carbon footprint. Innovations such as rear-door heat exchangers are being adopted to address high-density environments. Startups like Submer (est. 2015, investors include Norrsken VC, Planet First Partners and Mundi Ventures), by leveraging on immersion cooling technology, offer solutions that can reduce cooling energy consumption by up to 95%.

Taalas (est. 2023, backed by Quiet Capital and Pierre Lamond) is developing a platform that turns AI models into custom silicon, creating a ‘Hardcore Model’ that the company says is 1000x more efficient than its software counterpart.

Alternate power-sourcing solutions

Nuclear Power: Plans for nuclear-powered data centres, utilising small modular reactors (SMRs), are underway, with commissioning dates envisaged for 2030. Microsoft and Amazon are already integrating nuclear power into their data centres. Microsoft’s partnership with Constellation Energy to supply one of its data centres with nuclear power is one such example.

Green-hydrogen: ECL, a California-based startup (backed by Molex and Hyperwise Ventures) that develops modular, cost-effective green-hydrogen-powered data centres, recently raised its seed round to develop its first zero-emission data centre.

Wind & Solar: Companies are now building data centres closer to renewable energy sources, such as wind and solar farms. WindCORES, a brand of the German wind energy company WestfalenWIND, has placed data centres inside (quite literally!) wind turbines to have access to clean energy! Exowatt, a Miami-based startup that raised a whopping $20M seed round last month (investors including Andreessen Horowitz, Atomic Labs and Sam Altman), is using thermal energy collection and storage solutions to run green data centres.

Data centre operators are also exploring alternative power-sourcing strategies such as natural gas. For example, Lowercarbon Capital-backed Crusoe powers its data centres with natural gas that would otherwise be released into the atmosphere.

‘Out of the World’ Solutions

Underwater Data Centres: Startups like Network Ocean (est. 2023, backed by Climate Capital), Orca X and Subsea Cloud are developing data centres that are submerged in water to leverage natural cooling.

Lunar Data Centres: Startups like Lonestar (est. 2018, backed by Scout Ventures) are working on developing small data centres on the moon, which could take advantage of abundant solar energy and would be less susceptible to natural disasters.

Space Data Centres: Thales Alenia Space is studying the feasibility of space-based data centres that would run on solar energy.

Provocative Science (est. 2023, backed by Unbounded Capital) is taking a completely different route by using the heat generated in data centres to run direct air capture (DAC)!

Conclusion and Future Outlook

As the demand for data storage and computational power continues to rise, driven by AI, the need for more and larger data centres will grow exponentially. The energy consumption of these facilities presents a significant challenge, necessitating innovations in efficiency and sustainable practices. Data centres are set to become even more integral to the global digital infrastructure, and the industry must adapt to meet these increasing demands responsibly and sustainably. Even if the AI industry improves data centre energy efficiency by 10%, doubling the number of data centres would still lead to an 80% increase in data centre carbon emissions (2 × 0.9 = 1.8 times today's level).
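The arithmetic behind the 10% efficiency example can be checked with a few lines of Python. This is a minimal sketch under simplifying assumptions that are ours, not measured data: emissions scale linearly with the number of data centres, every facility gets the same efficiency gain, and the carbon intensity of the electricity supply stays constant. The function names are hypothetical.

```python
def relative_emissions(capacity_multiplier: float, efficiency_gain: float) -> float:
    """Emissions relative to today's baseline (1.0 means unchanged).

    Assumes emissions scale linearly with capacity and that grid carbon
    intensity is constant (illustrative assumptions only).
    """
    if not 0.0 <= efficiency_gain < 1.0:
        raise ValueError("efficiency_gain must be in [0, 1)")
    return capacity_multiplier * (1.0 - efficiency_gain)


def break_even_gain(capacity_multiplier: float) -> float:
    """Efficiency gain needed to keep emissions flat as capacity grows."""
    if capacity_multiplier <= 0.0:
        raise ValueError("capacity_multiplier must be positive")
    return 1.0 - 1.0 / capacity_multiplier


# Doubling the number of data centres with a 10% efficiency gain.
ratio = relative_emissions(capacity_multiplier=2.0, efficiency_gain=0.10)
increase_pct = round((ratio - 1.0) * 100, 1)
print(f"Emissions vs. today: {ratio:.2f}x (+{increase_pct}%)")

# Efficiency gain that would be needed just to stand still.
print(f"Break-even efficiency gain: {break_even_gain(2.0):.0%}")
```

Under these assumptions, doubling capacity would need a 50% efficiency gain just to hold emissions flat, which is why efficiency improvements alone are unlikely to be enough without cleaner power sources.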

As a thesis-driven fund, we believe in backing category-defining companies. In the realm of data centres, we see two different futures emerging: one from a short-term perspective and another from a long-term, aspirational standpoint. In the short term, we are particularly interested in novel solutions that leverage a combination of renewables and long-duration energy storage (LDES). Additionally, we believe that making fundamental changes, even if small, can have a significant impact. Examples include hardware solutions that tweak chip architectures, or improved cooling systems.

From a long-term perspective, we see deep-tech solutions, such as advanced chips designed specifically for the future, as highly promising. The future of AI and computational technology hinges on robust data centre infrastructure. Addressing environmental and operational challenges is crucial. Innovations in renewable energy, cooling technologies, and AI-driven optimisations promise a more sustainable and efficient future for data centres.

That said, we strongly believe in the resilience of our species. If data centres are fundamentally required, we will find ways to make them sustainable. Case in point: Ethereum, the second-largest cryptocurrency by market cap, has successfully reduced its electricity requirements by an impressive 99%!

We’re very excited about this space, and if you’re a founder building in this sector, we extend our warm invitation to engage with us. We’d love to discuss investment opportunities!